Warning: Another TL;DR. Let me give you a quick history of this special case: a win against Google Panda after three (3) long years. Yes, three long years!

Background: In January 2011, I got a call from a US-based company and was eventually brought in as an SEO consultant.

The Company’s Situation:

1. Historical data in Google Analytics showed a slow, consistent, year-round decline in overall traffic across more than 10 of their sites.

2. More than 10 of the sites they own had duplicate content. Worth mentioning too: they had hundreds of thousands of duplicate pages, and all domains were EMDs (Exact-Match Domains).

3. Bounce rates across all sites averaged 90% and up. The main logos of the different legacy websites were all the same and all linked to their main website.

4. A sub-domain migration had already been done prior to the consultation. They used the sub-domain approach to replace some of their legacy sites.

5. Zero knowledge of SEO. Technical SEO matters such as sitemap submission, canonicalization, duplicate content, proper site migration, 301 redirects, schema, and a whole lot more were just random ideas to them.

6. The use of the company’s brand logo on all legacy sites was due to their “re-brand”.

7. Google cached their pages erroneously, so cached copies showed different domain URLs across their sites.

Important Google updates around this date:

Overstock.com Penalty — January 2011: In a rare turn of events, a public outing of shady SEO practices by Overstock.com resulted in a very public Google penalty. JCPenney was hit with a penalty in February for a similar bad behavior. Both situations represented a shift in Google’s attitude and foreshadowed the Panda update.

Attribution Update — January 28, 2011: In response to high-profile spam cases, Google rolled out an update to help better sort out content attribution and stop scrapers. According to Matt Cutts, this affected about 2% of queries. It was a clear precursor to the Panda updates.

Panda/Farmer — February 23, 2011: A major algorithm update hit sites hard, affecting up to 12% of search results (a number that came directly from Google). Panda seemed to crack down on thin content, content farms, sites with high ad-to-content ratios, and a number of other quality issues. Panda rolled out over at least a couple of months, hitting Europe in April 2011.

Source: Moz.com

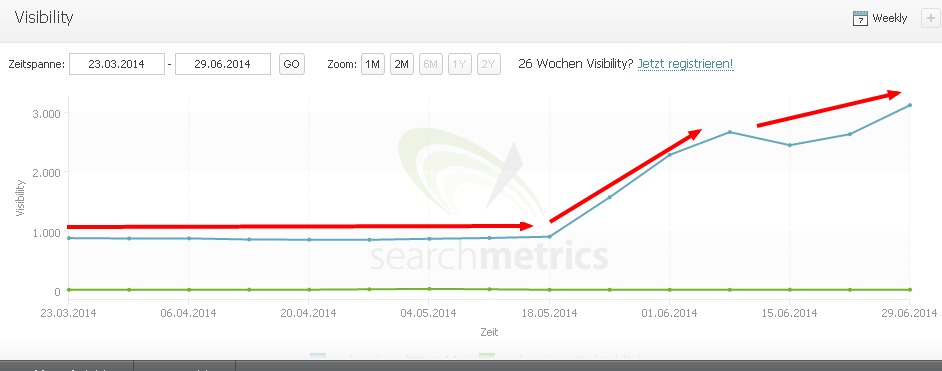

Fast forward… After a three-year battle with Google, the website recovered.

While many SEOs call the latest Google Panda 4.0 a softening update, it did not matter. The client finally saw the light! Were they lucky? Yes and no.

But why did it take them 3 years to recover? I believe it was not only Google Panda that delayed the recovery, but more so OTHER THINGS.

Here’s Why:

1. Not 100% Buy-in from Top Management.

The consolidation of all legacy websites under one roof (one domain) was debated among top management because of the past glory years of those keyword-rich domains. Given the duplicate content, the massive traffic losses caused by the Panda algorithm, and their blurred branding effort, we recommended migrating to a single branded domain.

Reason: Consolidation of the pages initially addressed duplicate content issues and made all marketing efforts focused on a single brand.

Tip: Some notes on duplicate content can be found on the Google Webmaster Tools Help page.
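
To make the consolidation idea more concrete, here is a minimal Python sketch of how a 301 redirect map from legacy domains to one branded domain could be built. Everything named here is hypothetical: the example hosts, the section paths, `BRAND_HOST`, and the `redirect_target` helper are assumptions for illustration, not the mapping this client actually used.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical legacy exact-match domains, each mapped to a section
# on the single branded domain.
LEGACY_TO_SECTION = {
    "cheap-widgets-example.com": "/widgets",
    "best-gadgets-example.com": "/gadgets",
}
BRAND_HOST = "www.brand-example.com"  # assumed consolidation target


def redirect_target(legacy_url: str) -> Optional[str]:
    """Return the branded URL a legacy URL should 301 to, or None if the host is unknown."""
    parts = urlsplit(legacy_url)
    host = (parts.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    section = LEGACY_TO_SECTION.get(host)
    if section is None:
        return None
    # Keep the legacy path under the new section; the legacy root maps to the section itself.
    path = section + (parts.path if parts.path != "/" else "")
    return urlunsplit(("https", BRAND_HOST, path, parts.query, ""))
```

Each legacy page gets exactly one destination, so the duplicate copies collapse into a single URL per piece of content. The resulting pairs would then be fed into whatever redirect mechanism the server uses.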

2. Moving Target on Implementation of SEO Recommendations.

As consultants, we were limited by how the recommendations were carried out. There were many developers and designers on the client’s side; however, slow task delegation dragged out the entire process. A delay of 3-4 months before any recommendation from the SEO audit was carried out was simply unacceptable.

3. No Dedicated Tech/IT and UX Team.

Working with a client whose team was spread too thinly across many smaller projects made the SEO project harder. For example, the developer who worked on the SEO tasks was, at the same time, the server admin and more! Having one server admin also handle database tasks is just taxing given the size of the website/s involved. This is just plain bad project management.

4. Lack of Ready Content Team.

This item led to long debates with top management. The suggested shift to a content-based website that serves its visitors better was received with hesitation, since it might impact the revenue model of the business as a directory website.

5. Following Top Management’s Whims at the Expense of Ideal SEO Implementations.

Our SEO recommendations may have been ideal, but management didn’t see them as feasible because a lot of those pages were “untouchables”: management feared losing even more traffic. Interim solutions were put in place but were not really helpful at all. We recommended killing a lot of those duplicate pages (many times in the past), but this was not carried out until 2014.

To cut the story short, an in-house SEO was hired to oversee SEO implementations. However, due to the large tasks thrown at him, from data analysis to other major work, a lot of the recommendations we’d made were not done, despite his being good at what he does as an SEO. Many of those SEO to-dos were on-page and off-page tasks that required more manpower. The problem persisted through 2012.

Thankfully, in 2013, the operations manager, dedicated tech team, database expert, project manager, SEO team, and server admin became more proactive and faster than before. This was the biggest improvement made over the years. Efficiency was way better.

Finally, we reached and tackled…

6. Bad Information Architecture, User Experience Design and Slow Database Issues.

We looked at everything from Information Architecture to User Experience Design. We drilled further into the database design and the slow DB queries, which were causing multiple server outages.

Worth noting too: thanks to the good support and persistence of middle management, the big boss finally gave in (somehow?). The company invested in creating quality content meant for users. A dedicated tech team and an in-house SEO team were formed to finally combat Google Panda. The project manager also made sure things rolled out as planned and on time.

Personally, I am happy that our SEO recommendations still serve as their basis (at least) for moving forward, making sure that the website has well-balanced quality content throughout its important pages. We’ve also helped their managers and tech teams understand how SEO know-how integrates into their daily tasks. Although the company still has a lot to do in order to regain leadership in their industry via search, its future is now clearer after more than 3 years in the gray area.

Key Takeaways

1. Before taking on any SEO project, make sure that there’s total buy-in from top management. They should believe in SEO. Make it clear to the decision makers what’s in it for the company (on a larger scale) when it implements SEO best practices.

2. Ascertain that you have a ready team of content marketers, project managers, server admins, software developers, and UX designers.

UX design, server reliability, and top-notch content, which primarily improve user experience on the website, are large factors behind the Google Panda recovery. Here are guidelines that you can use to educate your clients in building high quality sites.

3. Delayed Project equals Big Revenue Loss.

Self-explanatory. Adherence to the timeline is paramount.

4. Always educate the company you work with on how to properly conduct SEO.

You may or may not retain the client but you’ll always feel better by giving them the right direction or framework in order to improve their brand visibility online. That’s the real VALUE.

5. Soft skills are super important.

Dealing well with the different stakeholders of the company you work with is key to moving the needle of success faster. Software developers, business people, and creatives have different mindsets and mood swings. Learning to deal with them properly is gold.

6. Dilly-dallying is a No-no.

Top management needs to understand that dilly-dallying on decisions negatively impacts the bottom line. Teams down the line become frustrated too.

7. Talk about bottom line most (all) of the time with the bosses.

The magic bean for getting top management’s attention is to make sure that discussions revolve around the impact of the project on the company’s bottom line.

Just a little warning, though: when you come on board in panic mode, such as when the company has just been hit by Google Panda, you’ll have to spill your guts explaining to the stakeholders why they really have to fix the issue as fast as they can. Be ready.

Tip: An income projection PowerPoint slide can get most top management into total buy-in. Don’t scare them. Simply present the cause and effect of the project in a manner that they can easily understand. Talk dollars and pounds. =)
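
If you want a number to put on that slide, a rough back-of-the-envelope calculation is usually enough. The Python sketch below is only an illustration: the function `projected_revenue_loss` and every figure passed to it are made-up assumptions, not this client’s data, and it uses a deliberately simple model (a constant traffic drop over the delay period).

```python
def projected_revenue_loss(
    monthly_visits: float,
    conversion_rate: float,
    revenue_per_conversion: float,
    traffic_drop: float,
    months_delayed: int,
) -> float:
    """Estimate revenue lost while a fix is delayed, assuming a constant traffic drop."""
    # Revenue per month before the traffic drop.
    baseline_monthly_revenue = monthly_visits * conversion_rate * revenue_per_conversion
    # Share of that revenue lost each month, accumulated over the delay.
    return baseline_monthly_revenue * traffic_drop * months_delayed


# Hypothetical inputs: 100,000 visits/month, 2% conversion, $50 per conversion,
# a 30% traffic drop, and a 4-month implementation delay.
loss = projected_revenue_loss(100_000, 0.02, 50.0, 0.30, 4)
print(f"Estimated revenue lost to the delay: ${loss:,.0f}")
```

Swap in the company’s own analytics and revenue figures, and show how the total grows with every extra month of delay.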

One response to “So the Boss Gave In and We Won Against Google Panda 4.0”

I feel ya, Gary. In my experience, bigger companies are really tough to work with when you’re proposing content and architecture revamps. Lots of butthurt IT people out there who don’t want meddling SEOs picking apart their unoptimized work. Congrats on winning the long war. 🙂